Many aspects must be considered when developing software, and ensuring everything works as expected can be challenging. Developers and testers can face inconsistent software behavior due to post-release issues. Testers therefore need to know how to write test cases effectively so that every requirement the end user gives is fulfilled.

If the tests do not cover everything or the scenarios are unclear, we become less sure how well the software will work. This highlights the importance of creating effective test cases for testing everything properly and catching problems early.

This blog will dive into the lessons on how to write test cases effectively. Let’s start with knowing the basics of test cases, their features, standard format, and how to manage your test case.

For your convenience, we have compiled different test case templates.

Test Case Templates


What is a test case?

Test cases are like step-by-step instructions in software testing to check if the software features work correctly. They include details about what is needed before testing (pre-conditions) and what should happen after testing (post-conditions). Poorly constructed test cases can cause issues in the software to be overlooked. It’s crucial to have well-defined test cases that cover various scenarios to ensure thorough testing.

A test case is a guide for testers, providing a roadmap for verifying specific functionalities of the software. It highlights the exact steps, the data to be used, and the expected results. This helps in systematically identifying any defects or inconsistencies in the software.

Test cases are carefully written to focus on specific software parts, ensuring they meet the requirements and goals. Here are some essential things to know about test cases:

Manual testing steps: Testers create the test cases and follow them step by step to check if the software works.

Automated testing with tools or frameworks: Test cases can also be run automatically using automation testing tools and frameworks. The automation testing tools can be selected based on your software’s requirements.

Structure-checking: Test cases give a structured way to check if the software works as it should.

Independent tests: Each test case is separate, so the result of one doesn’t affect another.

Safe testing environment: You can run test cases in a controlled environment, ensuring everything needed is available without affecting the software in use.

Manual testing is time-consuming and leads to more human mistakes. To overcome the challenges faced during manual testing and to speed up the testing process, software organizations are moving towards automated testing.

Automated testing helps testers develop and deliver high-quality software to end users. As noted above, it requires tools or frameworks to run your tests and report accurate results. To learn about automation frameworks, you can follow the guide on automation testing frameworks, which will help you select the framework that suits your project requirements.

Now that we know what test cases are, let us look at the objectives of writing them in the following section.

What are the objectives of writing a test case?

The objectives of writing test cases in software testing are multi-faceted and play an essential role in ensuring the quality and effectiveness of the software development process. Below are the objectives of writing test cases.

Validation of features and functions: It focuses on thoroughly validating specific features and functions of the software, ensuring that they meet the software requirements and work as intended.

Guidance for daily testing activities: It guides testers with a structured approach for their day-to-day testing activities. This guidance ensures that testing efforts are systematic and comprehensive, offering valuable insights to write test cases effectively to validate software features and functions thoroughly.

Documentation of test steps: Test cases create a detailed catalog by documenting each step taken during testing. This catalog becomes a valuable resource for tracking activities and can be revisited when issues or bugs are identified.

Blueprint for future projects: They contribute to building a blueprint for future projects. They serve as a reference point for subsequent testing efforts, enabling efficiency by avoiding the need to start testing from scratch in future testing journeys.

Early detection of usability issues and design gaps: Test cases are instrumental in uncovering usability and design issues at an early stage of development. This early detection allows for timely adjustments, reducing the number of critical problems emerging later in the Software Development Life Cycle (SDLC).

Facilitation of onboarding for new testers and developers: As test cases are well-structured and documented, they facilitate onboarding new testers and developers easily, even if they join ongoing projects. This enables a rapid understanding of testing procedures and smoother integration into ongoing projects.

Writing test cases is not just about verifying software functionality; it also guides testers by documenting activities, providing a foundation for future work, detecting issues early, and facilitating the smooth integration of new team members into the testing process.

Let us now understand the standard format of writing test cases that must be followed when writing a test case.


What is the standard format of a test case?

In this section, we will explore the standard structure for documenting test cases, which makes it easier for testers to create, execute, and manage tests consistently. The details below may vary based on the project’s requirements and the complexity of the test case.

Test case ID: This is a combination of numbers and letters unique to each test. It helps organize tests into groups called test suites.

Test name: A descriptive name that summarizes the purpose of the test case.

Pre-conditions: These things must be ready before starting the test. It could be getting the correct data, setting up the app a certain way, or ensuring everything is prepared.

Test steps/Actions: A step-by-step sequence of actions to be performed during the test, including user interactions.

Test inputs: This consists of the data set, parameters, and variables required for the test case.

Test data: Specific data used in the test case, including sample inputs.

Test environment: Details of the test environment, including hardware, software, and configurations.

Expected result: The anticipated outcomes or behavior after executing the test case.

Actual result: The actual outcomes observed during the test execution.

Dependencies: Any external libraries or conditions impacting the test case must be mentioned under dependencies.

Test case author: The person responsible for creating and maintaining the test case.

Status criteria: Criteria used to determine whether the test case is successful (passed) or unsuccessful (failed).
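The fields listed above can be captured in a small data structure. The following is a minimal Python sketch, not a prescribed schema: the class name `DocumentedTestCase`, the `Status` enum, the sample values, and the pass rule (the actual result must equal the expected result exactly) are all assumptions made for illustration. Test inputs and test data are merged into one `test_data` field to keep the sketch short.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Status(Enum):
    NOT_RUN = "not run"
    PASSED = "passed"
    FAILED = "failed"


@dataclass
class DocumentedTestCase:
    """One documented test case; field names mirror the standard format."""
    test_case_id: str
    name: str
    preconditions: list[str]
    steps: list[str]
    test_data: dict[str, str]
    environment: str
    expected_result: str
    author: str
    dependencies: list[str] = field(default_factory=list)
    actual_result: Optional[str] = None  # filled in after execution

    def status(self) -> Status:
        """Assumed status criterion: actual result equals expected result."""
        if self.actual_result is None:
            return Status.NOT_RUN
        if self.actual_result == self.expected_result:
            return Status.PASSED
        return Status.FAILED


# Illustrative values only; none of them come from a real project.
login_case = DocumentedTestCase(
    test_case_id="TC001",
    name="Login with valid credentials",
    preconditions=["A registered user account exists"],
    steps=["Open the login page", "Enter valid credentials", "Click 'Login'"],
    test_data={"username": "alice", "password": "s3cret"},
    environment="Chrome on Windows 11",
    expected_result="User is logged in",
    author="QA team",
)
```

Before execution, `login_case.status()` returns `Status.NOT_RUN`. Once a tester records `actual_result`, the same call reports whether the case passed or failed under the assumed criterion.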

Now that you are familiar with what test cases are and their standard format, let us look at the features of test cases.

Common features of a test case

Testers and developers work together to ensure that the software works as expected, is of high quality, and does what users want. To do this, testers write test cases to check every vital part of the software. Here are some features to consider when learning how to write test cases.

Thorough coverage: Ensuring all essential aspects of software applications are covered, like reliability, functionality, and usability. This includes scenarios that users might encounter during application usage.

Clarity and simplicity: The test case must be written straightforwardly without getting into too many technical details, making it easier for testers to understand and execute the test case.

Dynamic and updated: Test cases undergo revisions and updates to adapt to changing software requirements, aligning with end-user preferences and organizational priorities.

Sequential organization: In a test scenario, test cases are organized in sequences or groups, with prerequisites of one test case influencing others within the same sequence.

Consistent and reproducible: Test cases must give consistent and repeatable results, ensuring reliability in testing processes. This capability helps identify bugs, verify fixes, and assess new changes.

Specified outcomes: Test cases provide details on expected outcomes and preconditions, establishing a structured framework for testing and ensuring consistent results.

Automation potential: Test cases can be automated for enhanced efficiency, reducing the potential for human error and speeding up the testing process.

Types of test cases

Writing test cases effectively also involves understanding their various types. The significance of each type depends on the testing goals and the characteristics of the software under test.

Below are the main types of test cases, to help you select the type that aligns with your requirement analysis for testing software applications.

Functional test case: Functional test cases check whether the software’s essential functions align with expectations. Functional testing is a form of black box testing, which means you don’t need access to the application’s internal structure to perform the test.

Functional testing is a standard step in the QA process during the Software Development Life Cycle (SDLC). The QA team creates functional test cases and repeats them whenever new functionality is introduced.

User interface test case: User interface test cases verify how the software looks and works visually. They check for link errors and the application’s appearance. The testing and design teams work together to ensure the software looks the same on different web browsers.

Different browsers may display the application differently, so UI testing is essential for cross-browser compatibility, ensuring the application maintains a consistent appearance across multiple browsers.

Performance test case: Performance testing checks how well the software works and how fast it responds. For example, it checks how long the application takes to respond after any operation. The testing team usually writes test cases and often automates these tests. They are done to understand how the software performs in real situations and are written when there are specific speed requirements.

Integration test case: Integration testing checks how different software parts work together. Both the development and testing teams work together on these tests.

Usability test case: Usability testing checks how easily users interact with the application. It involves a series of steps for users, like navigating a website or making a purchase. These test cases can be written in advance without detailed knowledge of the application.

Database test case: Database testing checks how well the database system works. It verifies if the code safely handles data without errors or data loss. SQL queries are often used for these tests.

Security test case: Security testing protects data and finds weaknesses in the software. It checks if the software can handle attacks from inside and outside sources. Testers and developers write these test cases, which include checks on password requirements and access control.

This involves conducting penetration tests and security-focused assessments such as risk analysis, vulnerability scanning, and threat modeling. Security test cases are created by testers and developers who understand the software application’s database. Some aspects of these tests focus on evaluating password complexity requirements and confirming access controls and permissions.

User acceptance test case: User acceptance testing considers the user’s perspective to ensure the software meets their expectations. It checks whether the software meets acceptance criteria, covering all application parts.


How to write a good test case?

In the software development lifecycle, creating good test cases is essential. It is vital to conducting successful tests and achieving bug-free software applications.

Here are some key reasons to write good test cases:

Ease of maintenance: Writing test cases may be time-consuming, especially when applications are under test. Good test cases are crucial as they can be easily maintained and reused, saving time and effort.

Adaptability: To avoid updating the entire test suite for each new feature, it helps to write test cases that are not tied to details likely to change, especially user interface details. This practice saves time and reduces errors in test cases.

Time savings for critical testing: Time saved in writing test cases can be redirected towards identifying and testing edge cases. This ensures the quality of software applications and contributes to an enhanced end-user experience.

Maintaining application stability: Good test cases play a crucial role in maintaining the stability of applications, particularly when new features are introduced. They help ensure that additions do not negatively impact existing functionality, reducing the risk of regression errors and making the application more stable and reliable for end-users.

Early bug identification: Well-written test cases contribute to the early identification and elimination of application bugs. This proactive approach minimizes the risk of high costs associated with rework and prevents delays in the development process.

Now that you have explored the key points of writing a good test case, you may be curious about how to write test cases effectively. The steps and key points to write test cases effectively will be discussed in more detail in the section below.

How to write test cases effectively?

Writing effective test cases is critical to ensuring thorough and successful testing within the software development lifecycle. Here are key considerations for writing test cases that provide optimal results.

Thoroughness: Writing test cases effectively goes beyond the basics, exploring various scenarios, including edge cases and potential error situations. Thorough testing helps uncover hidden issues that might not be identified in routine scenarios.

Efficiency: Efficiency is important when you write test cases. Prioritize testing areas more likely to have defects or a significant impact, optimizing the testing process in terms of time and resources.

Early detection: Design test cases so that they help detect defects early in the development process. Identifying issues early minimizes the cost and effort required to fix problems later.

Adaptability: Test cases should adapt to software changes, such as updates or new features. They need to be flexible and easily adjustable to accommodate evolving requirements.

Clear documentation: Document the test cases very clearly so that team members, including new additions, can easily understand and execute the test cases.

Comprehensive coverage: Write test cases that provide comprehensive application coverage, addressing critical functionalities and various usage scenarios.

Scenario-based testing: Base the test cases on real-world scenarios to mimic user interactions with the software. This approach helps identify potential issues that users might encounter in practical usage.

Data-driven testing: Incorporate data-driven testing methodologies to assess how the application handles different inputs and datasets. This helps in evaluating the robustness of the software.

By following these principles, understanding how to write test cases effectively becomes a strategic attempt to deliver a thorough and efficient testing process for software applications. As we continue learning from this blog on how to write test cases, we will discuss the lessons to consider when writing test cases effectively.
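To make the data-driven principle above concrete, here is a minimal sketch using Python's standard `unittest` module. The function under test, `parse_quantity`, and its rule (a whole number from 1 to 99) are hypothetical and exist only for illustration. The point is that the test logic is written once and the data rows vary.

```python
import unittest


def parse_quantity(text: str) -> int:
    """Hypothetical function under test: parse an order quantity (1 to 99)."""
    value = int(text.strip())  # raises ValueError for non-numeric text
    if not 1 <= value <= 99:
        raise ValueError(f"quantity out of range: {value}")
    return value


# Each row is one data set. Boundary values (1 and 99) are included on purpose.
VALID_ROWS = [("1", 1), (" 42 ", 42), ("99", 99)]
INVALID_ROWS = ["0", "100", "-5", "abc", ""]


class TestParseQuantity(unittest.TestCase):
    def test_valid_inputs(self):
        for text, expected in VALID_ROWS:
            # subTest reports each failing row separately instead of
            # stopping at the first failure.
            with self.subTest(text=text):
                self.assertEqual(parse_quantity(text), expected)

    def test_invalid_inputs(self):
        for text in INVALID_ROWS:
            with self.subTest(text=text):
                with self.assertRaises(ValueError):
                    parse_quantity(text)
```

Saved as a module, the tests can be run with `python -m unittest`. Adding a new scenario then means adding a row to one of the lists rather than writing a new test method.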


Lessons on how to write test cases effectively

In this section, we will learn some of the best practices to write test cases effectively that will help testers and developers deliver quality software and meet the requirements of their end-users.

Stick to the scope and specification

Understanding the scope and purpose of writing test cases is crucial. In the past, assumptions about how a test case should work led to challenges. Learning from experience, it became clear that having a solid grasp of the Software Requirement Specification (SRS) document is essential. Relying on intuition instead of a logical approach can lead to incorrect assumptions.

Let us understand this with a simple scenario.

- Scenario:

Suppose you are asked to test the fund transfer feature of a mobile banking app. Without thoroughly reading the software requirement specification, you assume users can only transfer funds between their accounts within the same bank.

- Reality check:

When examining the SRS closely, you can see that the client’s requirement includes enabling users to transfer funds to accounts in other banks. The assumption in the scenario above was incorrect, as the client mentioned the cross-bank transfer capability in the SRS.

- Lesson Learned:

It’s easy to assume features and functionalities when creating test cases, but this can steer you away from client requirements. This can impact the product being tested and the relationship with the client organization.

Be mindful of the product updates

Understanding the software requirement specification is crucial for practical testing. However, if the SRS describes an outdated version of the software, sticking strictly to it is no longer appropriate. It doesn’t make sense to test features that are no longer relevant or have been deprecated.

As the world of technology evolves, software development and testing approaches are also enhanced to make the testing process faster and more efficient. The initial model was the waterfall model, and over time its challenges were addressed by the V model. Currently, many software organizations use the Agile model, an iterative approach that improves on the waterfall and V models.

Agile methodologies dominate product development, emphasizing quick and adaptive processes. To understand this better, let’s take a scenario.

- Scenario:

Suppose you are involved in testing an eCommerce website. The original software requirement specification describes a checkout process involving multiple payment steps. After moving to an Agile model, the organization planned to revamp the checkout process to make it more streamlined and user-friendly.

- Documentation updates:

While the original SRS remains a valuable reference, the testing team updates its documentation to reflect the current state of the application. This ensures that testing efforts align with the latest changes made during the Agile development cycle.

Write to-the-point descriptions

A test case description is pivotal in identifying a bug’s root cause, highlighting the necessity of including steps for reproduction. In the early stages of the testing journey, a common mistake was being excessively detailed, assuming that more information was always better. However, the lesson learned emphasized the importance of clarity. Writing clear, direct, and informative descriptions is crucial, avoiding unnecessary elaboration. The focus should be on straightforward communication.

It is advisable to include only essential and valid steps in test cases. Lengthy test cases risk losing focus and clarity, so each test case should aim for a single expected result to maintain simplicity. For instance, if multiple test cases involve common actions, referencing the ID of the test case that covers those actions in the prerequisites keeps each test case short and makes the shared steps easy to find.

To understand it better, let’s look at the example of writing a clear and direct test case.

Example:

Test Case ID: TC001

Description: Validates the functionality of the ‘Login’ button.

Steps:

Open the application login page.

Enter valid credentials.

Click the ‘Login’ button.

Expected Result: The user is logged in successfully.
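The TC001 example above maps naturally onto an automated check. The sketch below uses Python's `unittest` and is only an illustration: `login` and `VALID_USERS` are hypothetical stand-ins for the real application, and a real suite would drive the actual login page rather than call a function directly.

```python
import unittest

# Hypothetical stand-in for the application under test.
VALID_USERS = {"alice": "s3cret"}


def login(username: str, password: str) -> bool:
    """Return True when the credentials match a known user."""
    return VALID_USERS.get(username) == password


class TestLoginButton(unittest.TestCase):
    """TC001: validates the functionality of the 'Login' button."""

    def test_valid_credentials_log_user_in(self):
        # Steps 1 to 3 (open page, enter credentials, click 'Login') are
        # collapsed into one call in this sketch.
        self.assertTrue(login("alice", "s3cret"))
```

Note that the test keeps the same shape as the written case: one short description, a few steps, and a single expected result.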

Put yourself in the customer’s shoes

Consider an end-user who reaches out to customer support, expressing discontent with a software feature that fails to meet their expectations.

The software tester must convey the customer’s perspective to the development team and ensure the customer’s concern is resolved in line with the requirements.

When writing test scenarios, developers and testers must keep the end-user’s needs in mind, because the software product is designed for the customer. This includes covering the usability and accessibility of the software product.


User personas

A user persona is a fictional representation of an end-user, offering insights into how individuals with various job roles interact with the software. If someone is unfamiliar with user personas, they might question the need to create imaginary characters to write test cases effectively.

To illustrate the significance, let’s consider Jack as an example. Jack, a web developer, uses cloud-based testing platforms like LambdaTest for cross-browser testing to assess how web elements appear across different browsers for his websites or mobile applications. In this context, Jack is primarily concerned with frontend functionalities and doesn’t delve into backend processes like API communication or activities like database testing, security testing, etc.

To enhance the process of writing test cases effectively, it’s valuable to establish different user personas, each representing a specific audience community and their professions. By doing so, the focus shifts towards creating test cases that address the particular needs of each user group. This approach ensures a more targeted and comprehensive testing strategy aligned with the diverse requirements of the software’s user base.

Be granular while writing down the steps for execution

When writing test cases, it’s crucial to provide detailed yet straightforward instructions for smooth execution, especially for new testers. Each test case should clearly state its aim and scope and be self-explanatory. All necessary prerequisites, including test data, should be highlighted within the test case itself. Peer review is essential for maintaining quality.

It is advisable to avoid compound sentences so that test cases are clear to execute. Instead, create a test case walkthrough with a concise and specific step-by-step guide.

For example, consider a test case for cross-browser testing:

Log in to www.lambdatest.com.

Navigate to the Real Time section.

Choose testing configurations, including Browser, Version, OS, and Screen Resolution.

Initiate the test by clicking the START button.

Scroll from the top to the bottom of the webpage.

Verify that all icons and paddings render correctly.

Change the resolution display to check for compatibility with different screen sizes.

Terminate the testing session.

It’s easy to follow when you break down steps to the most granular level, as demonstrated in the above test case, contributing to the effectiveness of the test case. This approach ensures that even new testers can easily comprehend and execute each step.

Classify test cases based on business scenarios and functionality

This approach provides a structured framework for developing and managing test cases, allowing for a thorough examination of the system from diverse perspectives. The objective is strategically determining which tests to create and when to create them, promoting a targeted and purposeful testing strategy.

By classifying test cases according to business scenarios, you gain insights into how the system aligns with real-world use cases. This approach ensures that tests are designed to simulate scenarios that end-users will likely encounter, promoting a more realistic evaluation of the system’s performance and functionality.

Similarly, organizing test cases based on functionality allows for a systematic assessment of each component or feature within the system. This method helps identify specific functionalities that require in-depth inspection, ensuring that each feature is thoroughly examined. By breaking down the system into its integral parts, testing efforts can be targeted toward areas critical to the application’s overall performance and reliability.


Take ownership of your test cases

In large projects, test cases are often juggled among a pool of software testers without clear ownership. Appropriate distribution of test cases is essential in such scenarios. Each software tester should take responsibility for the test cases assigned to them.

Ownership here extends across the product’s entire software testing life cycle. It means tracking how the test cases hold up after execution and with each software update, once the product is used by actual users. It involves observing how well the test cases work in real-world situations over time. This includes reviewing performance statistics and contributing proactive ideas to enhance the overall user experience.

Prioritize your test cases

Test case prioritization involves systematically ranking test cases based on their importance. This process is pivotal in addressing two crucial constraints in software testing — time and budget — aiming to enhance fault detection efficiency.

Initially, the approach towards test scenarios was disorganized, with little recognition of the role prioritization plays in effective test case management.

An impactful lesson was learned during a specific release cycle when bandwidth was limited, and the looming release date necessitated swift action. Running only the high-priority test cases became essential. However, customer-reported failures after the release forced a rollback. This experience underscored the importance of prioritizing test cases while writing them, rather than deciding under deadline pressure.
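One simple way to apply prioritization under a time constraint is sketched below in Python. It is an illustration under stated assumptions, not an established algorithm from this article: each test case carries a numeric priority (1 is highest, an assumed convention) and an estimated duration, and a greedy pass picks the highest-priority cases that still fit the remaining time. The test IDs and numbers are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedTest:
    test_id: str
    priority: int  # 1 = highest priority (assumed convention)
    minutes: int   # estimated execution time


def select_for_release(tests, budget_minutes):
    """Greedy pick: take tests in priority order while they fit the budget."""
    selected, remaining = [], budget_minutes
    # Within the same priority, shorter tests are tried first.
    for test in sorted(tests, key=lambda t: (t.priority, t.minutes)):
        if test.minutes <= remaining:
            selected.append(test)
            remaining -= test.minutes
    return selected


planned = [
    PlannedTest("TC-checkout", priority=1, minutes=30),
    PlannedTest("TC-profile-photo", priority=3, minutes=20),
    PlannedTest("TC-login", priority=1, minutes=10),
    PlannedTest("TC-search", priority=2, minutes=25),
]

chosen = select_for_release(planned, budget_minutes=65)
print([t.test_id for t in chosen])
# prints ['TC-login', 'TC-checkout', 'TC-search']
```

The greedy pass is deliberately simple. Real projects often weigh other factors as well, such as recent code changes or defect history, which this sketch ignores.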

Regularly review & update test cases

Regular reviews and updates of test cases are essential to ensure they accurately represent the current state of the software and identify areas for potential improvement. As the software changes, such as the addition of new requirements or modifications to existing ones, it is crucial to update test cases accordingly. This practice guarantees that test cases remain up-to-date, offering clear and relevant information during testing.

Collaboration with developers

Efficient collaboration with developers, product managers, and various stakeholders is vital. It involves sharing test cases and seeking feedback to align them with the latest requirements and expectations. This iterative process plays a key role in enhancing the efficiency and effectiveness of test cases.

Actively use a test case management tool

Test case management tools are essential for keeping a stable release cycle. They help everyone know who’s working on what and track deadlines for bug fixes. However, many teams do not use these tools to their full potential. To write and maintain test cases well, you must understand how to use your test case management tool.

Spreadsheets work for small teams but become a hassle as your team grows. Using tools like TestRail can help manage your test cases.

LambdaTest is more than just a testing platform; it allows you to make your testing process even more effective by providing integration with more than 120 tools, one of which is TestRail, which helps you manage your test cases. By integrating TestRail with LambdaTest, teams can enhance their test case organization, tracking, and execution processes, improving overall test management efficiency. To learn more about integration, follow the LambdaTest integration document.

Another point in writing test cases effectively is to track, maintain, and automate them. You’ll eventually need to hunt for a dedicated test case management application that suits your needs.

Monitor all the test cases

Test monitoring involves evaluating testing activities and efforts to assess progress, track test metrics, and estimate future actions. This process aims to provide the relevant team and stakeholders feedback about the ongoing testing process.

When multiple software testers work remotely or on a shared project, they may write similar test cases, so it becomes crucial to monitor all test cases. It is also essential to remove irrelevant and duplicate test cases to keep the test suite effective.
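Duplicate test cases can be spotted with a simple comparison of their steps. The following Python sketch is one possible approach, assuming test cases are stored as a mapping from an ID to a list of step strings. The function names and sample data are hypothetical, and only exact matches after normalization are detected.

```python
from collections import defaultdict


def normalize(step: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(step.lower().split())


def find_duplicates(test_cases):
    """Group test case IDs whose normalized step sequences are identical."""
    groups = defaultdict(list)
    for test_id, steps in test_cases.items():
        key = tuple(normalize(step) for step in steps)
        groups[key].append(test_id)
    return [sorted(ids) for ids in groups.values() if len(ids) > 1]


cases = {
    "TC010": ["Open the login page", "Enter valid credentials"],
    "TC011": ["open the  login page", "Enter valid credentials "],
    "TC012": ["Open the signup page"],
}

print(find_duplicates(cases))
# prints [['TC010', 'TC011']]
```

Test cases that are worded differently but cover the same behavior will not be caught this way, so a periodic manual review is still needed.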

Aim for 100% test coverage

Aiming for 100% test coverage is a significant goal in software testing. Achieving it means developing a comprehensive set of tests that covers every line of code in the program. This ensures a thorough examination of the software’s functionality and components.

It’s important to understand that 100% test coverage doesn’t guarantee flawless code but indicates that the tests have touched all lines of code. Well-structured tests enable the prediction of how specific inputs will impact the program’s output.
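A tiny Python example illustrates the gap between touching every line and verifying every behavior. The `average` function is hypothetical. One check executes all of its lines, yet an input that the check never tried still fails.

```python
def average(values):
    """Return the arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


# This single check executes every line of average(): 100% line coverage.
assert average([2, 4, 6]) == 4

# An untested input still breaks the function.
try:
    average([])
except ZeroDivisionError:
    print("empty input fails despite full line coverage")
```

Coverage reports which code ran during testing, not which inputs and outcomes were checked, so edge cases such as the empty list above still need their own test cases.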

Reaching 100% test coverage is challenging. Test cases should be carefully planned to cover every component and function specified in the Software Requirements Specification (SRS) document.

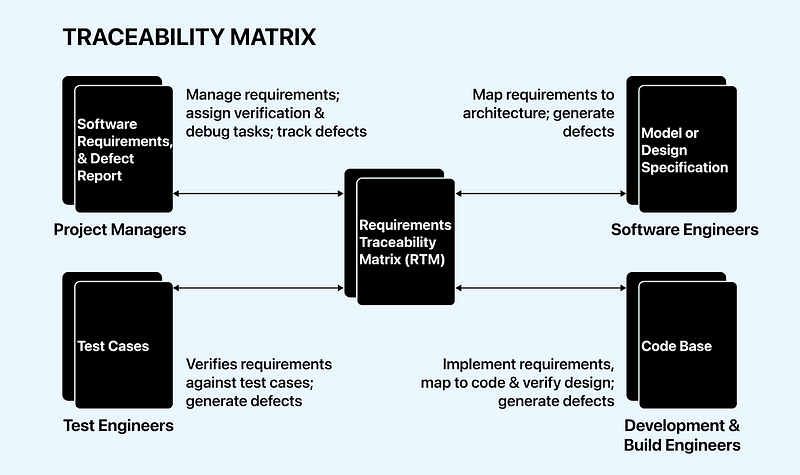

A traceability matrix can be used to ensure thorough coverage. This matrix acts as a map between test cases and requirements, verifying that no functions or conditions are left untested. It becomes a valuable tool for achieving 100% test coverage, offering a systematic approach to tracing and validating testing efforts.
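As a rough illustration of the traceability matrix described above, the Python sketch below maps requirement IDs to the test cases that cover them and lists requirements with no test. The IDs (`REQ-1`, `TC001`, and so on) and function names are made up for the example.

```python
def build_traceability_matrix(requirements, test_cases):
    """Map each requirement ID to the IDs of the test cases that cover it."""
    matrix = {req: [] for req in requirements}
    for test_id, covered in test_cases.items():
        for req in covered:
            if req not in matrix:
                raise KeyError(f"{test_id} references unknown requirement {req}")
            matrix[req].append(test_id)
    return matrix


def uncovered(matrix):
    """Return the requirements that no test case covers."""
    return sorted(req for req, tests in matrix.items() if not tests)


requirements = ["REQ-1", "REQ-2", "REQ-3"]
test_cases = {
    "TC001": ["REQ-1"],
    "TC002": ["REQ-1", "REQ-2"],
}

matrix = build_traceability_matrix(requirements, test_cases)
print(uncovered(matrix))
# prints ['REQ-3']
```

Here `REQ-3` has no test case, which is exactly the kind of gap the matrix is meant to expose before release.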

Beware of dependent test cases

When the behavior or outcome of one test case relies on the execution or result of another, it’s termed test case dependence. In such instances, bugs may surface in seemingly random scenarios, and replication might not proceed as planned. This situation highlights the importance of acknowledging that test cases can indeed depend on each other.

For example, there might be a test case (let’s call it X) that can only be executed after performing test cases Y and Z sequentially. This commonly occurs when dealing with non-mutually exclusive modules. A bug may only manifest if a scenario is drafted after identifying and executing the dependent test cases. It highlights the need to be aware of and manage dependencies among test cases for a thorough and effective testing process.
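Dependencies like the X, Y, Z example above can be made explicit and checked automatically. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later). The dependency map is an assumption built from the example: Z must run after Y, and X after both.

```python
from graphlib import CycleError, TopologicalSorter

# Each key lists the test cases that must run before it.
DEPENDENCIES = {
    "Y": set(),
    "Z": {"Y"},
    "X": {"Y", "Z"},
}


def execution_order(dependencies):
    """Return an order in which every test runs after its prerequisites."""
    return list(TopologicalSorter(dependencies).static_order())


print(execution_order(DEPENDENCIES))
# prints ['Y', 'Z', 'X']

# A circular dependency is a documentation error and is reported as such.
try:
    execution_order({"A": {"B"}, "B": {"A"}})
except CycleError:
    print("circular dependency between test cases")
```

Writing the dependencies down in one place also makes it easier to see which test cases could be made independent.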

Be the critic

In software testing, it’s sometimes crucial to adopt unconventional approaches to uncover unknown scenarios. These unknowns are situations that remain unnoticed by the product team until end-users report them.

After thoroughly reviewing all test cases for a specific scenario, testers should revisit them, wearing the hat of a critic rather than just a test case writer. This shift in perspective is essential for writing effective test cases, particularly when aiming for exploratory testing. Thinking differently and approaching the test cases unconventionally helps identify potential issues or scenarios that might have been overlooked during the initial review.

Be intent-specific

Human actions are typically guided by plans, and the same holds for software testing. Acceptance criteria play a pivotal role in crafting test cases that serve their purpose effectively.

Acceptance criteria refer to the conditions that verify whether the software functions as intended from the end-user’s perspective. It’s important to note that these criteria are not steps; they serve as a guide to assess the end-user’s intent.

For instance, acceptance criteria focus on broader user expectations instead of detailing specific steps like visiting a team page and clicking buttons. An example could be, “An administrator should be able to invite or remove team members working on the same project under an organization.” This approach ensures that test cases align with the user’s intent rather than being overly prescriptive about the steps involved.
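To make this concrete, here is a minimal sketch of a test named after the intent in that acceptance criterion rather than after UI steps. The `Project` class and its `invite` and `remove` methods are hypothetical, invented purely for illustration.

```python
class Project:
    """Hypothetical model used only to illustrate the acceptance criterion."""

    def __init__(self, admins):
        self.admins = set(admins)
        self.members = set()

    def _require_admin(self, actor):
        if actor not in self.admins:
            raise PermissionError(f"{actor} is not an administrator")

    def invite(self, actor, user):
        self._require_admin(actor)
        self.members.add(user)

    def remove(self, actor, user):
        self._require_admin(actor)
        self.members.discard(user)


def test_admin_can_invite_and_remove_team_members():
    # The test name states the user's intent; it says nothing about
    # which page is visited or which button is clicked.
    project = Project(admins=["alice"])
    project.invite("alice", "bob")
    assert "bob" in project.members
    project.remove("alice", "bob")
    assert "bob" not in project.members


test_admin_can_invite_and_remove_team_members()
```

If the team page is redesigned later, a test written this way still describes the same expectation, and only the underlying steps need to change.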

Negative test scenarios in software testing

While negative testing remains a widely recognized technique, its effectiveness depends on sticking to specific principles throughout the planning and execution of negative test scenarios. The following guidelines can assist software testers in effectively planning, creating, and executing negative test scenarios.

Organize Negative Test Scenarios: Create a dedicated folder for negative test scenarios within the project. This makes it easier to access and manage these scenarios separately.

Plan Early for Negative Scenarios: Think about negative scenarios early in the project. This proactive approach saves time, energy, and money, providing confidence before the project launches.

Use a Simple Folder Structure: Within the negative test scenarios folder, create sub-folders for each functionality or flow in the system. This helps organize and understand the scenarios easily.

Include References: Always use references such as ticket numbers, testing types, tags, and labels to indicate that a test case focuses on negative scenarios. This aids in planning future testing executions.

Consider Automation Potential: Negative scenarios, like positive test cases, can be automated. Identify automation candidates among negative scenarios, marking them appropriately for test automation engineers.

Cover Extreme User Activities: Include scenarios for extreme user activities, like attempting to submit an empty form or exploring an empty page state. Provide illustrations and informative text for clarity.

Discuss Negative Testing Terms: Engage with the team and the client to discuss and agree on the terms of negative testing. Since negative testing requires additional time, clarify when and how it will be performed.

Avoid Reinventing the Wheel: If there’s no test management tool in the project, but there are existing rules for tracking testing results, adhere to those rules instead of creating a new tracking system.
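As a small illustration of the extreme user activities mentioned above, the following sketch checks that an empty or incomplete form is rejected. The `validate_signup_form` function and its field names are assumptions made for this example, not part of any real application.

```python
def validate_signup_form(form):
    """Hypothetical validator: return a list of error messages (empty if valid)."""
    errors = []
    for field in ("email", "password"):
        if not form.get(field, "").strip():
            errors.append(f"{field} is required")
    return errors


# Negative scenarios: each input should be rejected with at least one error.
negative_cases = [
    ("empty form", {}),
    ("blank email", {"email": "   ", "password": "s3cret"}),
    ("missing password", {"email": "user@example.com"}),
]

for name, form in negative_cases:
    errors = validate_signup_form(form)
    assert errors, f"{name}: expected a validation error"
```

Keeping the negative inputs in one labeled list mirrors the advice to organize and tag negative scenarios separately, and makes them easy candidates for automation.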

Cross browser testing can help minimize outages

Understanding and noting browser differences is crucial when writing test cases effectively. A notable incident at one organization highlighted the impact of overlooking this aspect. During an unexpected outage, their payment pages displayed chaotically for end-users despite exhaustive efforts from developers, including cache clearing and server reboots.

Upon closer inspection, a pattern emerged: the affected users were on outdated IE browsers or specific Android devices from a particular vendor. This realization underscored the importance of acknowledging website incompatibility with different browsers and devices. Subsequently, a practice was adopted to conduct cross-browser testing in every release cycle to prevent the recurrence of such embarrassing situations.

The organization used the LambdaTest platform for effective cross-browser testing, which helped them rectify cross-browser compatibility issues. The convenience of cloud-based testing allows users to log in and initiate live testing from any location, at any time, and on any system.

Lean on automation

The emergence of progressive enhancement and the widespread adoption of Agile methodologies have elevated the significance of regression testing, turning it into an urgent need and a common source of frustration.

The solution lies in implementing an effective test automation strategy. For those currently conducting manual testing, contemplating a shift towards automation testing is worth considering.

The introduction of automation testing has proven to be a game-changer, particularly in the context of regression testing. Automation helps catch regressions early, boosts productivity, and frees up bandwidth for software testers. This newfound capacity allows testers to explore innovative approaches to writing effective test cases instead of being confined to repetitive manual testing routines.

Automation testing becomes even more effective when paired with advanced tools and platforms. Moving to a cloud-based solution, such as LambdaTest, not only enhances the efficiency of automated testing but also introduces scalability and flexibility, encouraging software testers to optimize their strategies and explore innovative approaches to creating effective test cases.

LambdaTest is an AI-powered test orchestration and execution platform that lets you run manual and automated tests at scale with 3000+ real devices, browsers, and OS combinations. It facilitates thorough coverage by providing a wide range of real browsers and devices for testing, ensuring reliability and functionality. Its simplicity in setting up and executing tests makes it suitable for dynamic and updated test cases.

LambdaTest’s organized test suites and consistent results contribute to sequential organization and reproducibility. Moreover, the platform supports automation for both web and mobile applications, enhancing efficiency and reducing the potential for human error in the testing process. Running tests over a platform can help you deliver quality software.

Test case documentation

Creating a flawless test document can be challenging in software testing, and rushing into test documentation without properly considering the scenario can lead to the delivery of failed software. Before initiating the documentation process, testers must understand the purpose of each test in order to write test cases effectively.

Maintaining simplicity and clarity in test instructions is important. The goal is to facilitate testers in easily completing the testing process by following the outlined instructions for each test. Several key considerations are recommended to excel in testing documentation:

Satisfactory Structure: Ensure the test document is well-structured.

Addressing Negative Test Cases: Include negative test cases for comprehensive testing.

Adopting Atomic Test Procedures: Break down test procedures into atomic, manageable units.

Prioritizing Tests: Prioritize tests to allocate testing resources efficiently.

Considering Sequence: Sequence matters; organize tests logically.

Maintaining Separate Sheets: Keep distinct sections for ‘Bugs’ and ‘Summary’ in the document.

A common observation is that the entire team tends to fixate on the test case document once it receives client sign-off. This fixation limits the creative thinking needed to write test cases effectively, which is why comprehensive coverage should be built into the test document from the start.

Conclusion

In conclusion, writing test cases effectively is a fundamental aspect of the software development life cycle, serving various objectives. We have also learned that the standard format includes essential elements like Test Case ID, Test Name, Test Steps, Expected and Actual Results, and more.

Along with test case features, we explored what makes a good test case and what to consider when writing test cases effectively. Following best practices, including scenario-based and data-driven testing, establishes a strategic approach to writing test cases, ultimately contributing to more reliable software that meets end-user expectations.